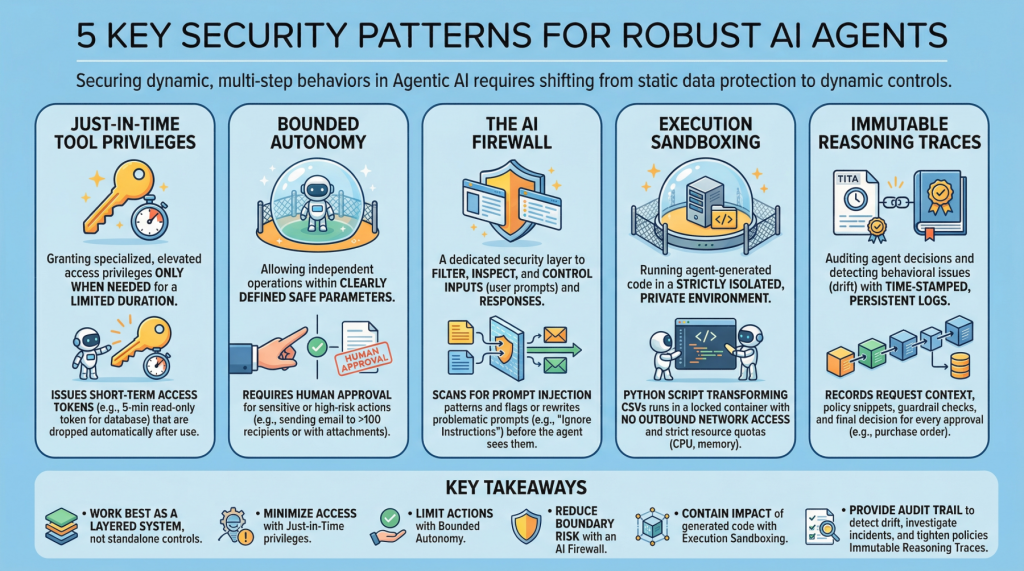

5 Essential Security Patterns for Robust Agentic AI

Agentic AI, which revolves around autonomous software entities called agents, has reshaped the AI landscape and influenced many of its most visible developments and trends in recent years, including applications built on generative and language models. With any major technology wave like agentic…

Google AI Releases Android Bench: An Evaluation Framework and Leaderboard for LLMs in Android Development

Google has officially released Android Bench, a new leaderboard and evaluation framework designed to measure how Large Language Models (LLMs) perform specifically on Android development tasks. The dataset, methodology, and test harness have been made open source and are publicly available on GitHub. Benchmark Methodology and Task Design: General coding benchmarks often fail to capture…

OpenAI Introduces Codex Security in Research Preview for Context-Aware Vulnerability Detection, Validation, and Patch Generation Across Codebases

OpenAI has introduced Codex Security, an application security agent that analyzes a codebase, validates likely vulnerabilities, and proposes fixes that developers can review before patching. The product is now rolling out in research preview to ChatGPT Enterprise, Business, and Edu customers through Codex web. Why OpenAI Built Codex Security? The product is designed for…

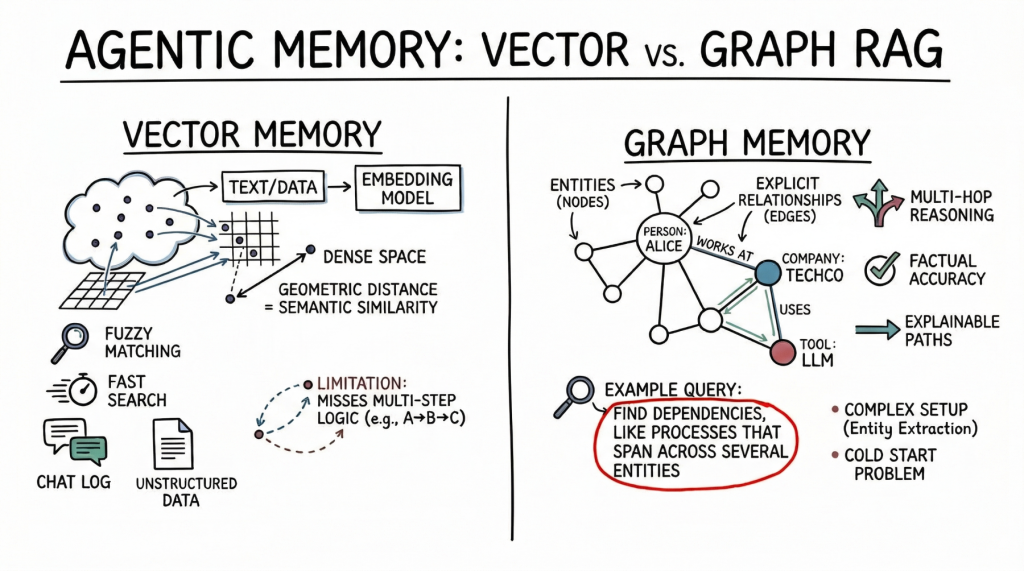

Vector Databases vs. Graph RAG for Agent Memory: When to Use Which

In this article, you will learn how vector databases and graph RAG differ as memory architectures for AI agents, and when each approach is the better fit. Topics we will cover include: how vector databases store and retrieve semantically similar unstructured information; how graph RAG represents entities and relationships for precise, multi-hop retrieval; how…

Liquid AI Releases LocalCowork Powered By LFM2-24B-A2B to Execute Privacy-First Agent Workflows Locally Via Model Context Protocol (MCP)

Liquid AI has released LFM2-24B-A2B, a model optimized for local, low-latency tool dispatch, alongside LocalCowork, an open-source desktop agent application available in their Liquid4All GitHub Cookbook. The release provides a deployable architecture for running enterprise workflows entirely on-device, eliminating API calls and data egress for privacy-sensitive environments. Architecture and Serving Configuration: To achieve low-latency…

A Coding Guide to Build a Scalable End-to-End Machine Learning Data Pipeline Using Daft for High-Performance Structured and Image Data Processing

In this tutorial, we explore how to use Daft as a high-performance, Python-native data engine to build an end-to-end analytical pipeline. We start by loading the real-world MNIST dataset, then progressively transform it using UDFs, feature engineering, aggregations, joins, and lazy execution. We also demonstrate how to seamlessly combine structured data processing, numerical computation,…
