Using NotebookLM as Your Machine Learning Study Guide

Learning machine learning can be challenging.

In machine learning model development, feature engineering plays a crucial role since real-world data often comes with noise, missing values, skewed distributions, and even inconsistent formats.

Machine learning model development often feels like navigating a maze, exciting but filled with twists, dead ends, and time sinks.

This post is divided into five parts; they are:
• Naive Tokenization
• Stemming and Lemmatization
• Byte-Pair Encoding (BPE)
• WordPiece
• SentencePiece and Unigram

The simplest form of tokenization splits text into tokens based on whitespace.
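As a rough illustration of whitespace-based tokenization, here is a minimal sketch in Python. The function name `whitespace_tokenize` and the sample sentence are made up for this example and do not come from the original post.

```python
def whitespace_tokenize(text):
    """Split text into tokens on runs of whitespace (spaces, tabs, newlines)."""
    # str.split() with no arguments splits on any whitespace and
    # discards empty strings, so repeated spaces do not create empty tokens.
    return text.split()


sample = "Hello, world!  This is\ta test."
tokens = whitespace_tokenize(sample)
print(tokens)
```

Note that punctuation stays attached to the neighboring word (for example `"Hello,"`), which is one reason more elaborate schemes exist.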

Quantization is a common strategy for making production machine learning models, particularly large and complex ones, lightweight by reducing the numerical precision of the model’s parameters (weights), usually from 32-bit floating-point to lower representations like…
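To make the idea of reducing numerical precision concrete, the following is a minimal sketch of one simple scheme: symmetric quantization of float weights to 8-bit integers with a single shared scale. This is an illustrative assumption, not necessarily the method the original post describes; the helper names `quantize_int8` and `dequantize` and the sample weights are hypothetical, and production toolkits typically use more refined approaches.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor to avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [v * scale for v in quantized]


weights = [0.5, -1.27, 0.003, 1.0]   # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)         # small integers instead of floats
print(restored)  # close to the originals, but not identical
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the precision given up in exchange for a smaller representation.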

Machine learning models have become increasingly sophisticated, but this complexity often comes at the cost of interpretability.

This post is divided into five parts; they are:
• Understanding Word Embeddings
• Using Pretrained Word Embeddings
• Training Word2Vec with Gensim
• Training Word2Vec with PyTorch
• Embeddings in Transformer Models

Word embeddings represent words as dense vectors…

Feature engineering is a key process in most data analysis workflows, especially when constructing machine learning models.

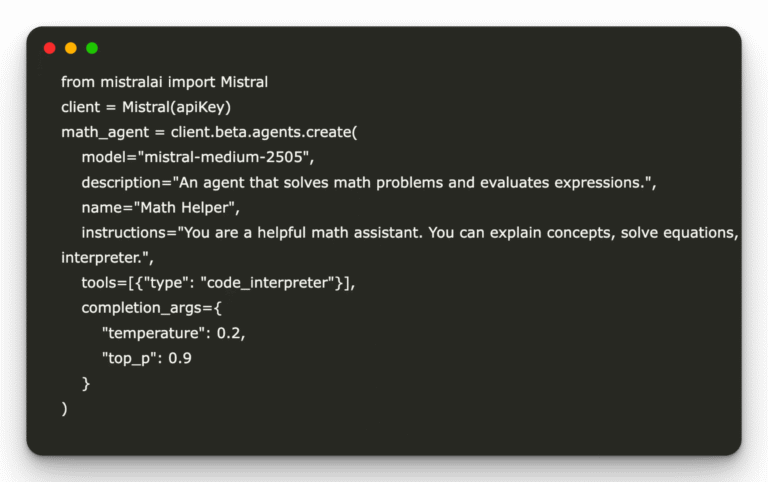

The Mistral Agents API enables developers to create smart, modular agents equipped with a wide range of capabilities. Key features include: Support for a variety of multimodal models, covering both text and image-based interactions. Conversation memory, allowing agents to retain…

Modern software engineering faces growing challenges in accurately retrieving and understanding code across diverse programming languages and large-scale codebases. Existing embedding models often struggle to capture the deep semantics of code, resulting in poor performance in tasks such as code…