New tool evaluates progress in reinforcement learning | MIT News

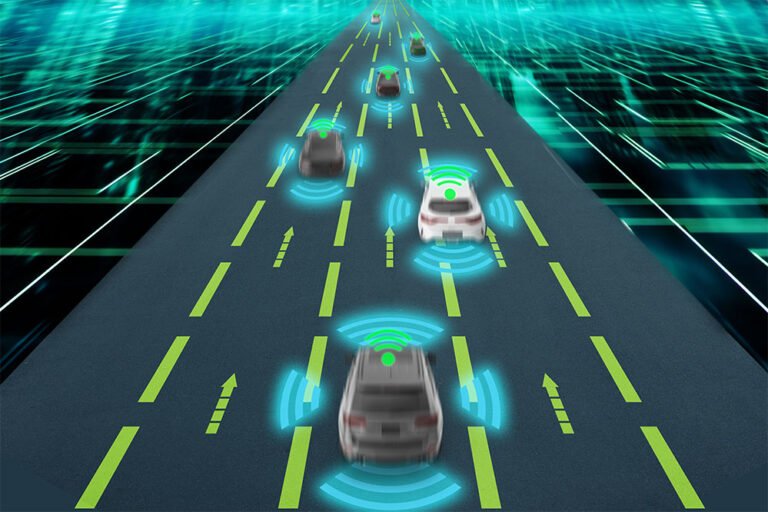

If there’s one thing that characterizes driving in any major city, it’s the constant stop-and-go as traffic lights change and as cars and trucks merge and separate and turn and park. This constant stopping and starting is extremely inefficient, driving…