Everything You Need to Know About How Python Manages Memory

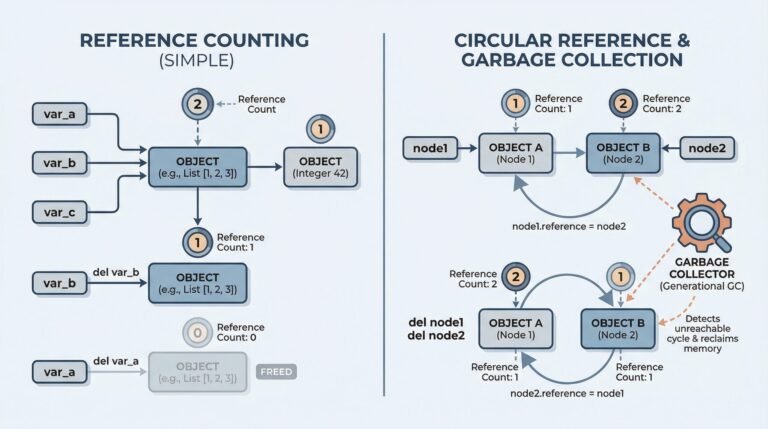

In this article, you will learn how Python allocates, tracks, and reclaims memory using reference counting and generational garbage collection, and how to inspect this behavior with the `gc` module. Topics we will cover include: the role of references and…