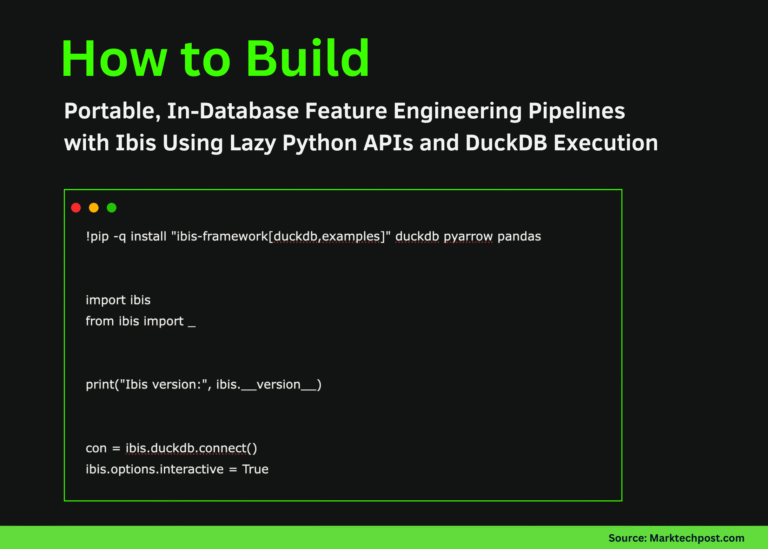

How to Build Portable, In-Database Feature Engineering Pipelines with Ibis Using Lazy Python APIs and DuckDB Execution

In this tutorial, we demonstrate how we use Ibis to build a portable, in-database feature engineering pipeline that looks and feels like Pandas but executes entirely inside the database. We show how we connect to DuckDB, register data safely inside…