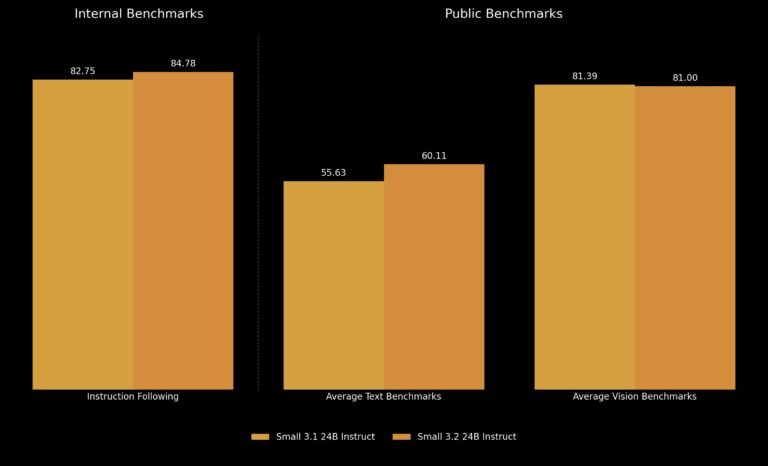

Mistral AI Releases Mistral Small 3.2: Enhanced Instruction Following, Reduced Repetition, and Stronger Function Calling for AI Integration

With the frequent release of new large language models (LLMs), there is a persistent quest to minimize repetitive errors, enhance robustness, and significantly improve user interactions. As AI models become integral to more sophisticated computational tasks, developers are consistently refining…