7 Important Considerations Before Deploying Agentic AI in Production

The promise of agentic AI is compelling: autonomous systems that reason, plan, and execute complex tasks with minimal human intervention.

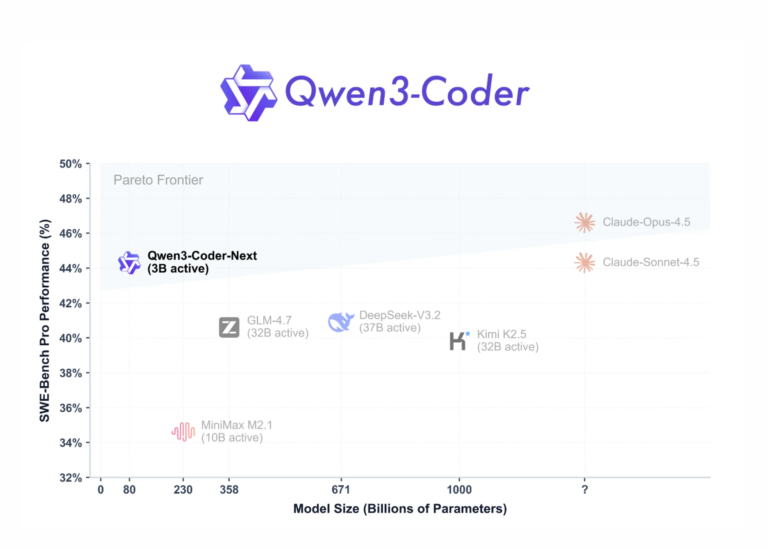

The Qwen team has just released Qwen3-Coder-Next, an open-weight language model designed for coding agents and local development. It sits on top of the Qwen3-Next-80B-A3B backbone and uses a sparse Mixture-of-Experts (MoE) architecture with hybrid attention. It has 80B total…

The large language model (LLM) hype wave shows no sign of fading anytime soon: after all, LLMs keep reinventing themselves at a rapid pace and transforming the industry as a whole.

You have mastered model.

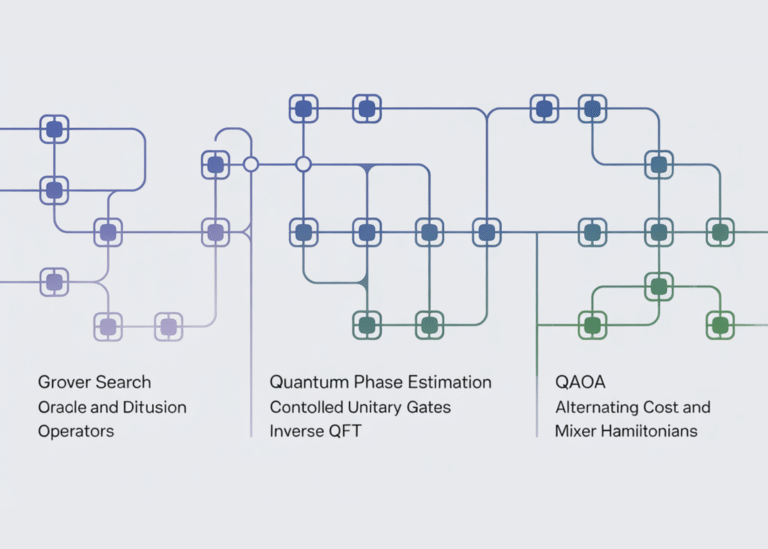

In this advanced, hands-on tutorial, we demonstrate how to use Qrisp to build and execute non-trivial quantum algorithms. We walk through core Qrisp abstractions for quantum data, construct entangled states, and then progressively implement Grover’s search…

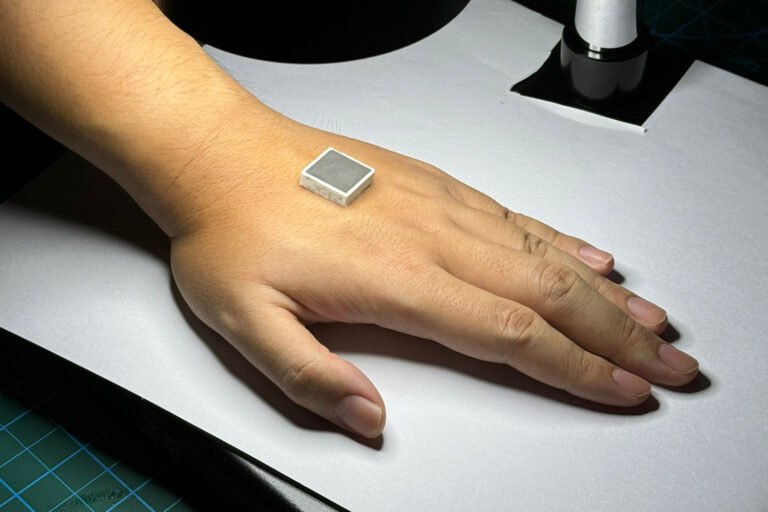

What if ultrasound imaging were no longer confined to hospitals? Patients with chronic conditions, such as hypertension and heart failure, could be monitored continuously in real time at home or on the move, giving health care practitioners ongoing clinical insights instead…

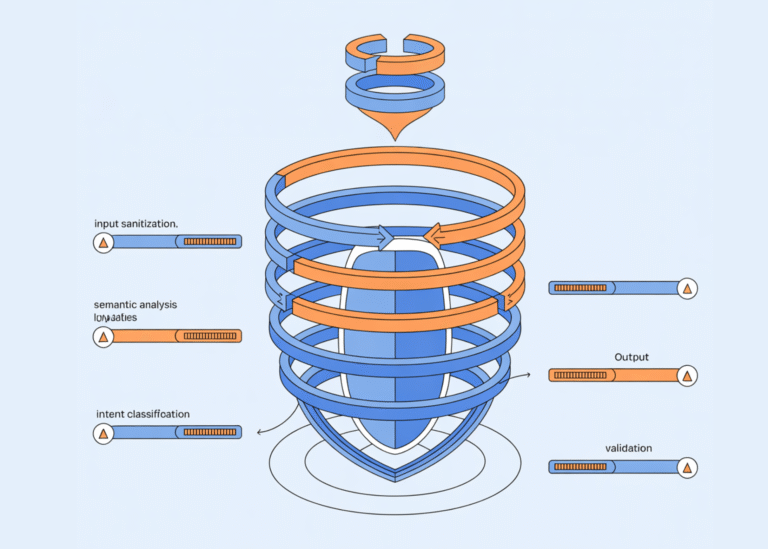

In this tutorial, we build a robust, multi-layered safety filter designed to defend large language models against adaptive and paraphrased attacks. We combine semantic similarity analysis, rule-based pattern detection, LLM-driven intent classification, and anomaly detection to create a defense system…

Google has introduced Conductor, an open-source preview extension for Gemini CLI that turns AI code generation into a structured, context-driven workflow. Conductor stores product knowledge, technical decisions, and work plans as versioned Markdown inside the repository, then drives…

What is Zero Padding? Zero padding is a technique used in convolutional neural networks in which additional pixels with a value of zero are added around the borders of an image. This allows convolutional kernels to slide over edge pixels and…
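The zero-padding idea described above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the linked article: the helper names `zero_pad` and `conv_output_size` are hypothetical, the image is a small nested list, and the output-size formula is the standard one for a square input and kernel.

```python
def zero_pad(image, pad):
    """Return a copy of a 2D list with `pad` rows/columns of zeros on every side."""
    if pad < 0:
        raise ValueError("pad must be non-negative")
    width = (len(image[0]) if image else 0) + 2 * pad
    # Rows of zeros above the image.
    padded = [[0] * width for _ in range(pad)]
    # Original rows, with zeros added on the left and right.
    for row in image:
        padded.append([0] * pad + list(row) + [0] * pad)
    # Rows of zeros below the image.
    padded.extend([0] * width for _ in range(pad))
    return padded


def conv_output_size(n, kernel, pad, stride=1):
    """Spatial output size of a convolution over an n x n input."""
    return (n + 2 * pad - kernel) // stride + 1


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
padded = zero_pad(image, 1)  # 3x3 image becomes 5x5

for row in padded:
    print(row)

# With a 3x3 kernel, padding of 1 keeps the 3x3 spatial size;
# without padding the output shrinks to 1x1.
print(conv_output_size(3, kernel=3, pad=1))
print(conv_output_size(3, kernel=3, pad=0))
```

With one pixel of padding, a 3x3 kernel can be centered on every original pixel, including the corners, which is why the output keeps the input's spatial size.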

Generative artificial intelligence models have been used to create enormous libraries of theoretical materials that could help solve all kinds of problems. Now, scientists just have to figure out how to make them. In many cases, materials synthesis is not…