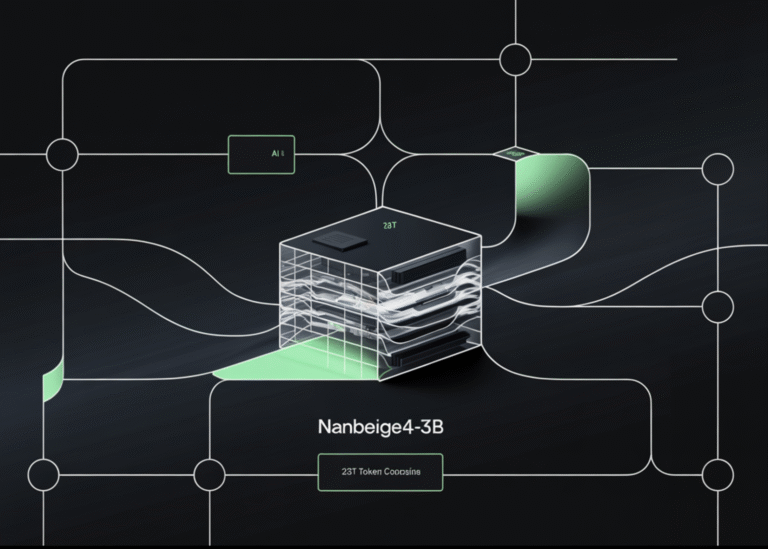

Nanbeige4-3B-Thinking: How a 23T Token Pipeline Pushes 3B Models Past 30B Class Reasoning

Can a 3B model deliver 30B class reasoning by fixing the training recipe instead of scaling parameters? Nanbeige LLM Lab at Boss Zhipin has released Nanbeige4-3B, a 3B parameter small language model family trained with an unusually heavy emphasis on…