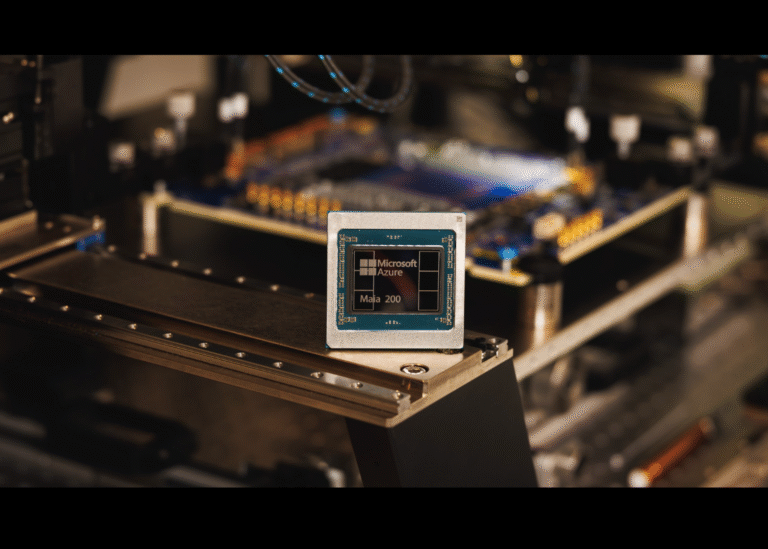

Microsoft Unveils Maia 200, an FP4- and FP8-Optimized AI Inference Accelerator for Azure Datacenters

Maia 200 is Microsoft’s new in-house AI accelerator designed for inference in Azure datacenters. It targets the cost of token generation for large language models and other reasoning workloads by combining narrow-precision compute, a dense on-chip memory…