Teaching AI models what they don’t know | MIT News

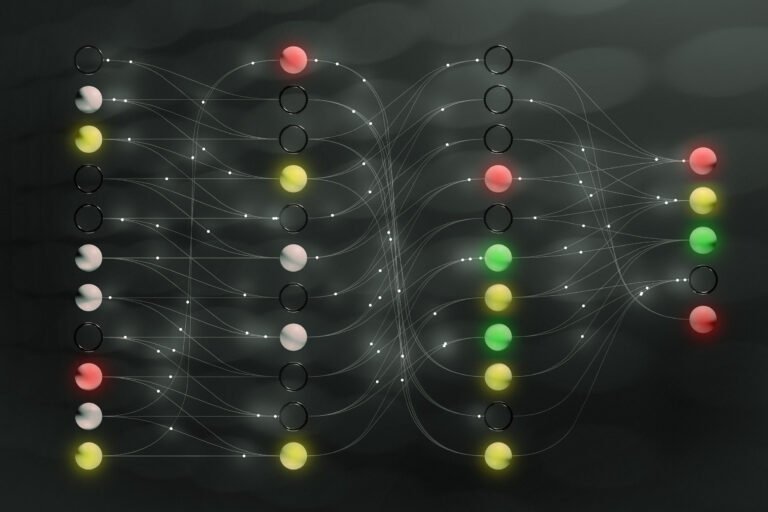

Artificial intelligence systems like ChatGPT provide plausible-sounding answers to any question you might ask. But they don’t always reveal the gaps in their knowledge or areas where they’re uncertain. That problem can have huge consequences as AI systems are increasingly…