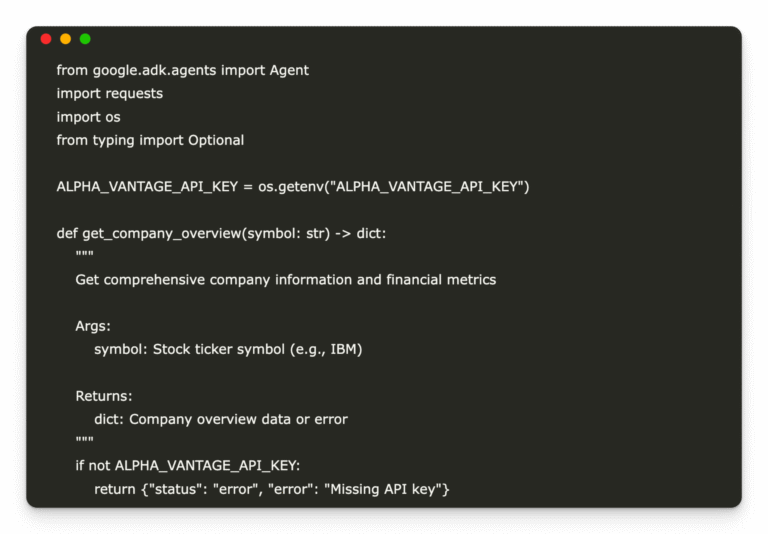

Step-by-Step Guide to Creating an AI Agent with Google ADK

Agent Development Kit (ADK) is Google's open-source Python framework for building, managing, and deploying multi-agent systems. It is designed to be modular and flexible, so it works well for both simple and complex agent-based applications. In this tutorial,…