Building the Internet of Agents: A Technical Dive into AI Agent Protocols and Their Role in Scalable Intelligence Systems

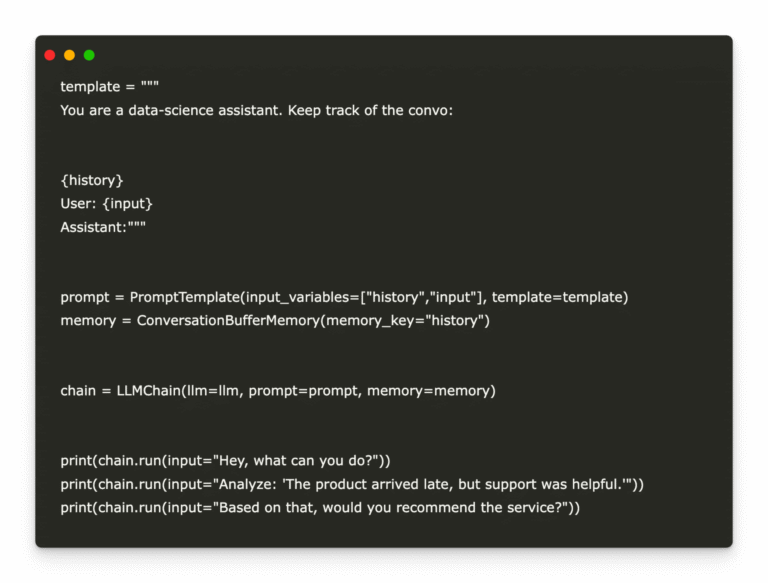

As large language model (LLM) agents gain traction across enterprise and research ecosystems, a foundational gap has emerged: communication. While agents today can autonomously reason, plan, and act, their ability to coordinate with other agents or interface with external tools…