A Coding Implementation to Train Safety-Critical Reinforcement Learning Agents Offline Using Conservative Q-Learning with d3rlpy and Fixed Historical Data

In this tutorial, we build a safety-critical reinforcement learning pipeline that learns entirely from fixed, offline data rather than live exploration. We design a custom environment, generate a behavior dataset from a constrained policy, and then train both a Behavior Cloning baseline and a Conservative Q-Learning agent using d3rlpy. By structuring the workflow around…
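To make the "conservative" part of Conservative Q-Learning concrete, here is a minimal sketch of the discrete-action CQL objective for a single logged transition. It is plain Python, not d3rlpy's API; the function names, the toy Q-values, and the `alpha` default are assumptions chosen for illustration. The penalty raises the loss whenever actions absent from the dataset receive high Q-values, which is what discourages the agent from trusting behavior the historical data never demonstrated.

```python
import math


def cql_penalty(q_values, dataset_action):
    """Conservative term for one state: logsumexp over all actions
    minus the Q-value of the action actually logged in the dataset."""
    m = max(q_values)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(q - m) for q in q_values))
    return lse - q_values[dataset_action]


def cql_loss(q_values, dataset_action, td_target, alpha=1.0):
    """Squared TD error on the logged action plus the weighted penalty.
    alpha is a hypothetical trade-off weight, not a recommended setting."""
    td_error = q_values[dataset_action] - td_target
    return 0.5 * td_error ** 2 + alpha * cql_penalty(q_values, dataset_action)


# Toy comparison: the same logged action (index 0), but in the first case
# an action the dataset never took (index 1) has an inflated Q-value.
overestimated = cql_penalty([0.0, 5.0], dataset_action=0)
pessimistic = cql_penalty([0.0, -5.0], dataset_action=0)
```

In this toy comparison `overestimated` is much larger than `pessimistic`, so gradient descent on the loss would push the unseen action's value down. A Behavior Cloning baseline has no such term because it fits the logged actions directly.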

Counter intelligence | MIT News

How can artificial intelligence step out of a screen and become something we can physically touch and interact with? That question formed the foundation of class 4.043/4.044 (Interaction Intelligence), an MIT course focused on designing a new category of AI-driven interactive objects. Known as large language objects (LLOs), these physical interfaces extend large language models…

5 Ways to Use Cross-Validation to Improve Time Series Models

Time series modeling…

Katie Spivakovsky wins 2026 Churchill Scholarship | MIT News

MIT senior Katie Spivakovsky has been selected as a 2026-27 Churchill Scholar and will undertake an MPhil in biological sciences at the Wellcome Sanger Institute at Cambridge University in the U.K. this fall. Spivakovsky, who is double-majoring in biological engineering and artificial intelligence, with minors in mathematics and biology, aims to integrate computation and bioengineering…

7 Important Considerations Before Deploying Agentic AI in Production

The promise of agentic AI is compelling: autonomous systems that reason, plan, and execute complex tasks with minimal human intervention.

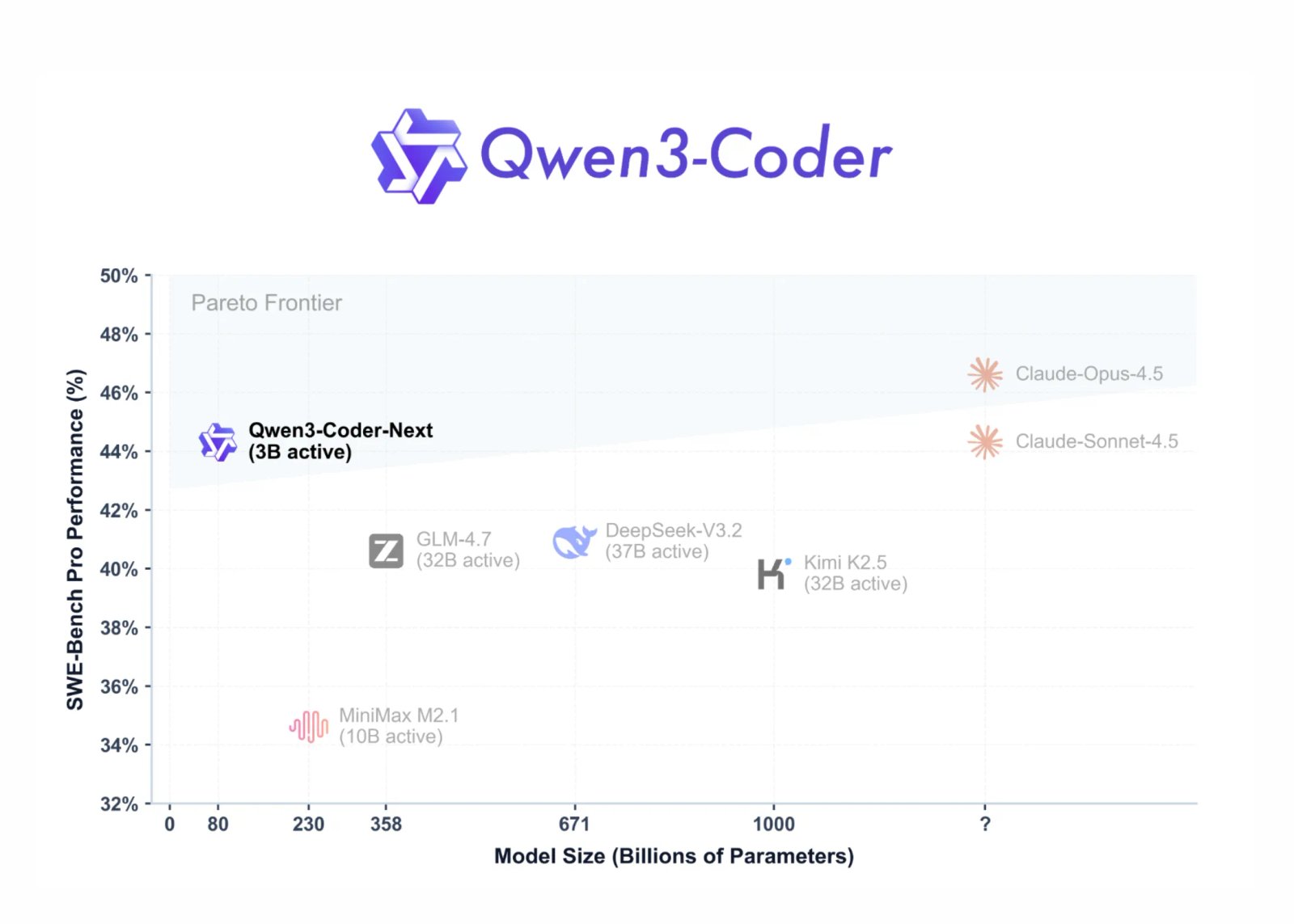

Qwen Team Releases Qwen3-Coder-Next: An Open-Weight Language Model Designed Specifically for Coding Agents and Local Development

The Qwen team has just released Qwen3-Coder-Next, an open-weight language model designed for coding agents and local development. It sits on top of the Qwen3-Next-80B-A3B backbone. The model uses a sparse Mixture-of-Experts (MoE) architecture with hybrid attention. It has 80B total parameters, but only 3B parameters are activated per token. The goal is to match…
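The gap between total and active parameters (3B of 80B is roughly 3.75% per token) comes from sparse routing: each token is sent to only a few experts. The sketch below shows generic top-k routing in plain Python. The teaser does not give Qwen3-Coder-Next's expert count, router design, or value of k, so the four toy experts, the logits, and `k=2` are all assumptions for illustration only.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k experts with the highest router logits and return
    softmax weights renormalized over just those experts."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # for numerical stability
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and mix their outputs by weight.
    Experts that are not selected are never evaluated."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Hypothetical experts: simple scalar functions standing in for expert MLPs.
toy_experts = [lambda x: x, lambda x: 2 * x, lambda x: 3 * x, lambda x: 4 * x]
toy_logits = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

routing = top_k_route(toy_logits, k=2)
output = moe_forward(1.0, toy_experts, toy_logits, k=2)
```

Here only two of the four toy experts run for the token, which is how a sparse model can keep per-token compute far below what its total parameter count suggests.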